NASA SUITS 2020 - Extended

The opportunity

In support of NASA’s Artemis mission, which aims to land American astronauts safely on the Moon by 2024, how might we design and create spacesuit information displays within mixed reality (MR) environments?

After a successful exit pitch in June 2020 for the 2020 NASA SUITS (Spacesuit User Interface Technologies for Students) challenge (view the submitted project here), the UI/UX team decided to continue improving on the designs while breaking from previous constraints to allow for more creative possibilities.

In support of NASA’s Artemis mission, the team explored different interaction methods for efficiency and effectiveness and designed a mixed reality (MR) informatics display system to enhance astronauts’ autonomy and safety. We prioritized camera, documentation, and navigation functions and focused on specific use-case scenarios to demonstrate our design decisions.

Research

Information gathering

Interviews with 1 former astronaut, 1 NASA scientist, 3 geologists & Dr. Leia Stirling

22 scholarly papers/reports by NASA scientists

Past interviews with 8 astronauts & planetary geologists and scientists

Analysis of VR game’s user interface

Videos and articles on voice- and gaze-based interactions

Findings

Prioritize safety and intent. Consumables (oxygen, water, battery) are limited, and every second counts

Note-taking needs to be fast: “(it) shouldn’t take longer to take note than to speak about it”

Graphical documentation of the sample and environment is important

Collecting unique and different samples is valuable

The resources astronauts have during an EVA (extravehicular activity) are “MCC whispering in their ear,” their crewmate, and a cuff note

The farther astronauts are from Earth, the greater the communication latency

Working long hours in the suit causes fatigue (EVAs can last up to 8 hours)

EVA environments are usually high in color contrast

The navigation systems are complex and still in development
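To put the communication-latency finding in numbers, here is a small worked sketch. It only computes the one-way light-travel time for a radio signal, which is the physical lower bound on delay; real links add processing and relay overhead. The function name is hypothetical, and the Earth to Moon figure is the commonly cited average distance.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
EARTH_MOON_AVG_KM = 384_400         # average Earth to Moon distance


def one_way_delay_seconds(distance_km: float) -> float:
    """Minimum one-way signal delay over the given distance (hypothetical helper)."""
    return distance_km / SPEED_OF_LIGHT_KM_S


moon_delay = one_way_delay_seconds(EARTH_MOON_AVG_KM)
print(f"Earth to Moon, one way: {moon_delay:.2f} s")
print(f"Round trip: {2 * moon_delay:.2f} s")
```

Even at lunar distance, a question and its answer are separated by well over two seconds, which is part of why the design leans toward astronaut autonomy.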

Design process

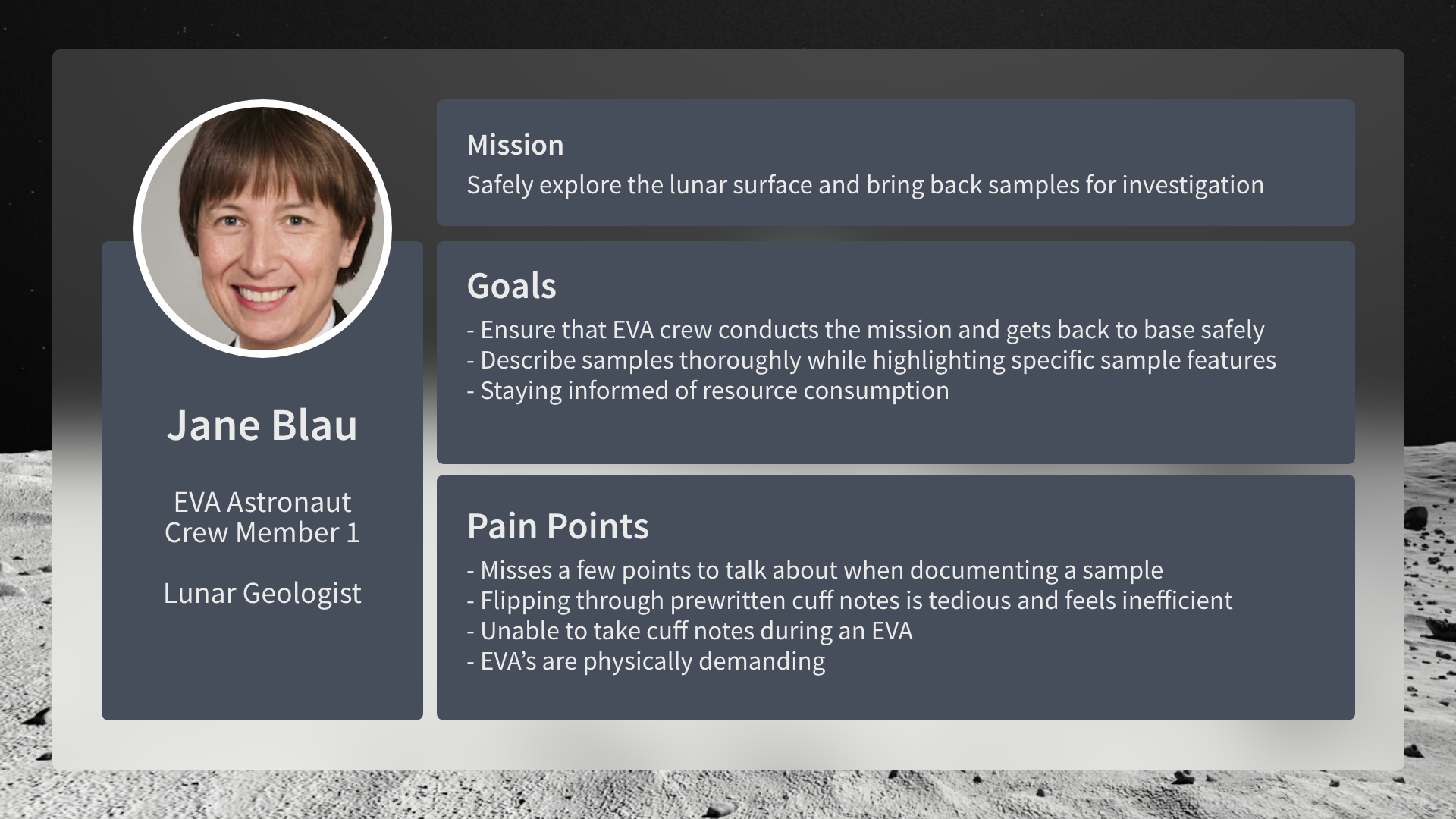

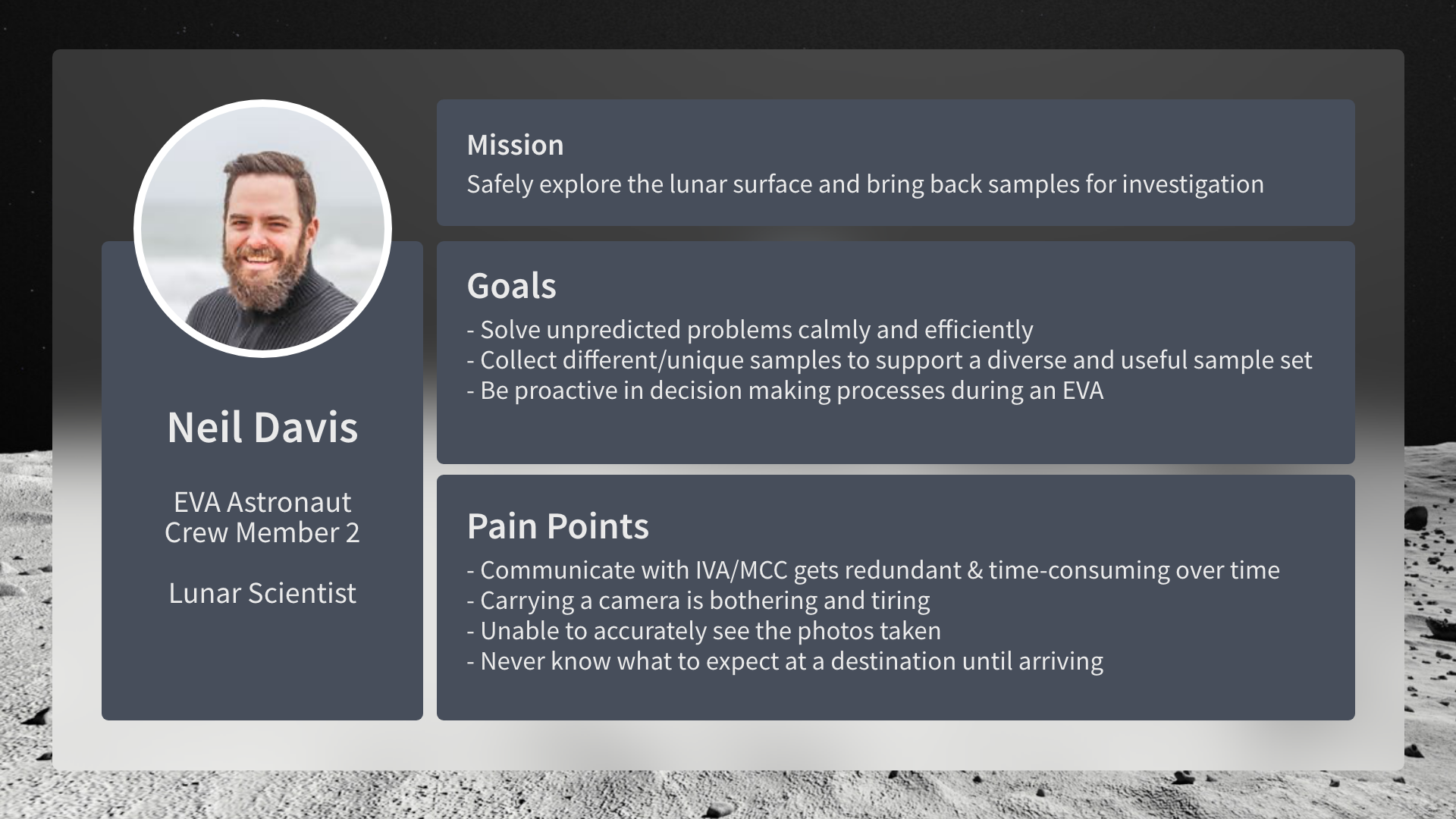

Personas

Based on the information gathered in our research, we created two EVA astronaut personas, Jane and Neil, to capture astronauts’ needs, experiences, behaviors, and goals during EVA missions.

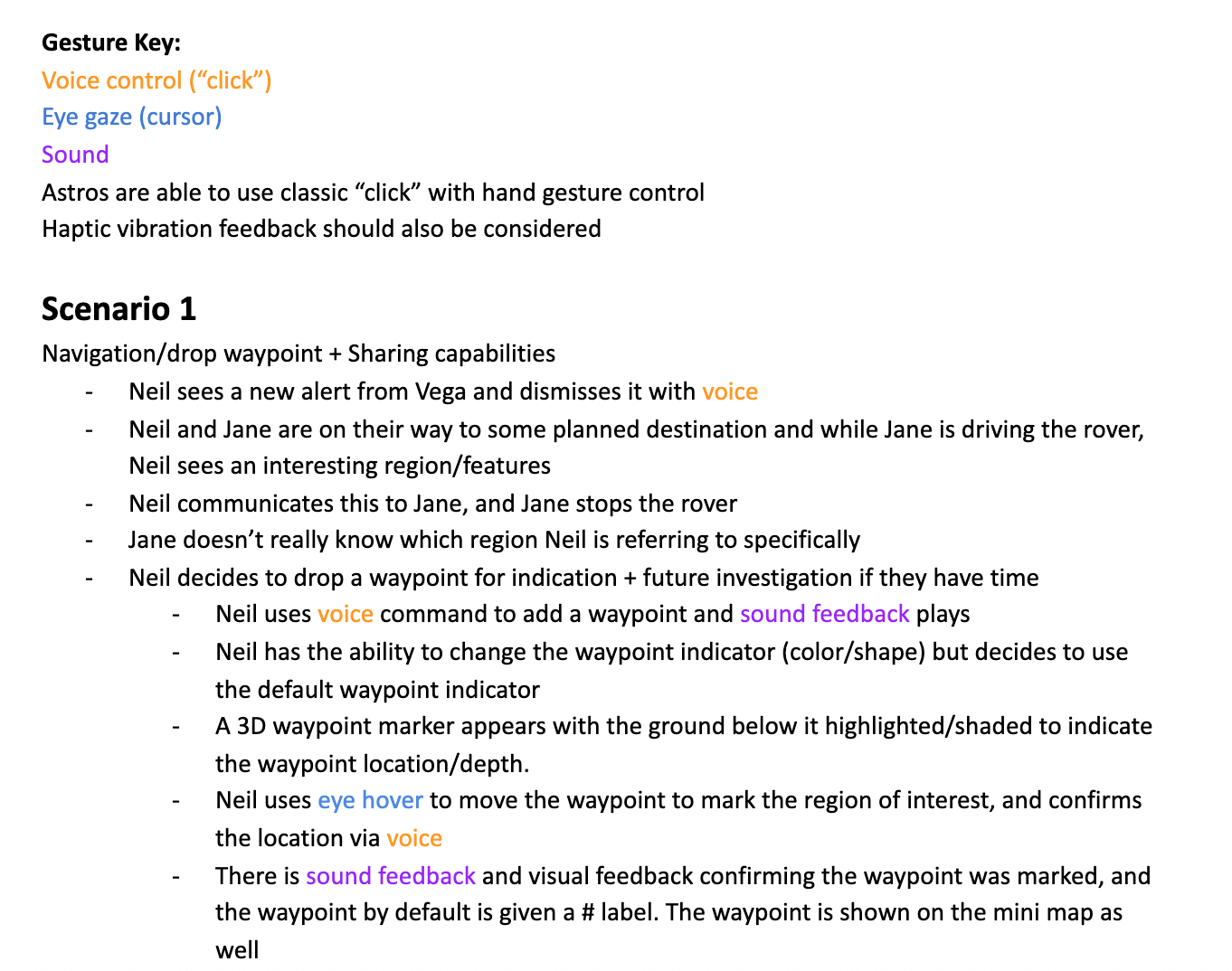

Scenario script

With Jane and Neil, our goal for the script was to flesh out how they would use the camera, documentation and navigation functions in realistic use cases. Additionally, we specified interactions such as voice control, eye gaze and sound feedback to plan for the design.

Interactions

Primary: voice and eye-tracking

VEGA, an artificial intelligence personal assistant, provides astronauts with the information they need during EVAs

Eye gaze (eye-tracking) acts as a "cursor" for navigating the interface, while voice is used to make selections

Secondary: hand gestures

Anything doable with voice can also be done by clicking the interface

Feedback: visual, audio and haptic/vibration cues

Fallback: traditional methods such as paper

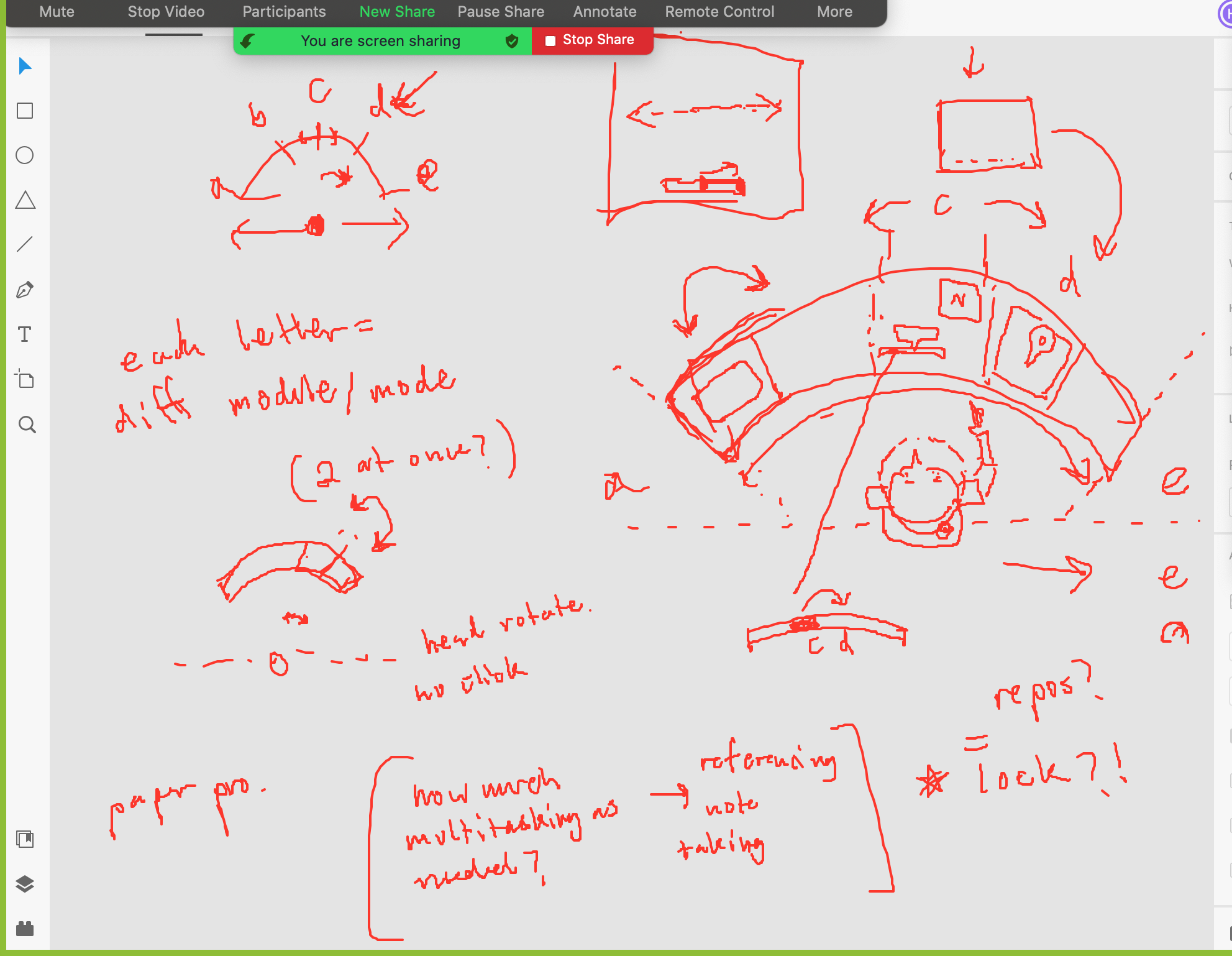

Design ideas & iterations

Testing

We tested on paper and later on the HoloLens 2 to see how near or far UI elements appeared relative to the world and to validate that the element placement and the chosen color palette still made sense.

Final product

Scenario 1

Jane and Neil are on a rover on their way to their mission destination. Neil asks Jane to stop driving and uses the drop waypoint function to mark an interesting spot. He shares it with Jane so she can see the spot he’s referring to.

Key design points

Waypoint markers

Sharing waypoints

Waypoint markers

Different waypoint types allow astronauts to establish their own organizational system

Waypoints are dropped on the lunar surface with eye-gaze (aim) and voice command (drop)

Sharing waypoints

Waypoints can be shared with crew members via voice

VEGA (blue rings) indicates that a waypoint has been shared and leads the crew to it
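As a rough illustration only, the "gaze to aim, voice to act" pattern described above could be modeled like this. It is not the team's implementation: the class names, the `drop` and `share` command strings, and the 2D coordinates are all hypothetical stand-ins for whatever the headset's gaze and speech systems would provide.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class Waypoint:
    kind: str                          # hypothetical type label chosen by the astronaut
    position: Tuple[float, float]      # surface point the gaze was aimed at
    owner: str
    shared_with: Set[str] = field(default_factory=set)


class WaypointSession:
    """Tracks the latest gaze hit and reacts to (hypothetical) voice commands."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.gaze_hit: Optional[Tuple[float, float]] = None
        self.waypoints: List[Waypoint] = []

    def update_gaze(self, hit: Optional[Tuple[float, float]]) -> None:
        # Called whenever eye tracking reports a new point on the surface.
        self.gaze_hit = hit

    def on_voice(self, command: str, argument: str = "") -> Optional[Waypoint]:
        if command == "drop":
            if self.gaze_hit is None:
                return None            # nothing is being aimed at, so nothing to drop
            waypoint = Waypoint(argument or "general", self.gaze_hit, self.owner)
            self.waypoints.append(waypoint)
            return waypoint
        if command == "share" and self.waypoints:
            waypoint = self.waypoints[-1]          # share the most recent waypoint
            waypoint.shared_with.add(argument)     # argument is the crew member's name
            return waypoint
        return None


neil = WaypointSession("Neil")
neil.update_gaze((12.5, -3.0))
spot = neil.on_voice("drop", "interesting")
neil.on_voice("share", "Jane")
```

Keeping the aim (gaze) separate from the trigger (voice) means a stray glance never drops a waypoint by itself; the voice command is the explicit confirmation.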

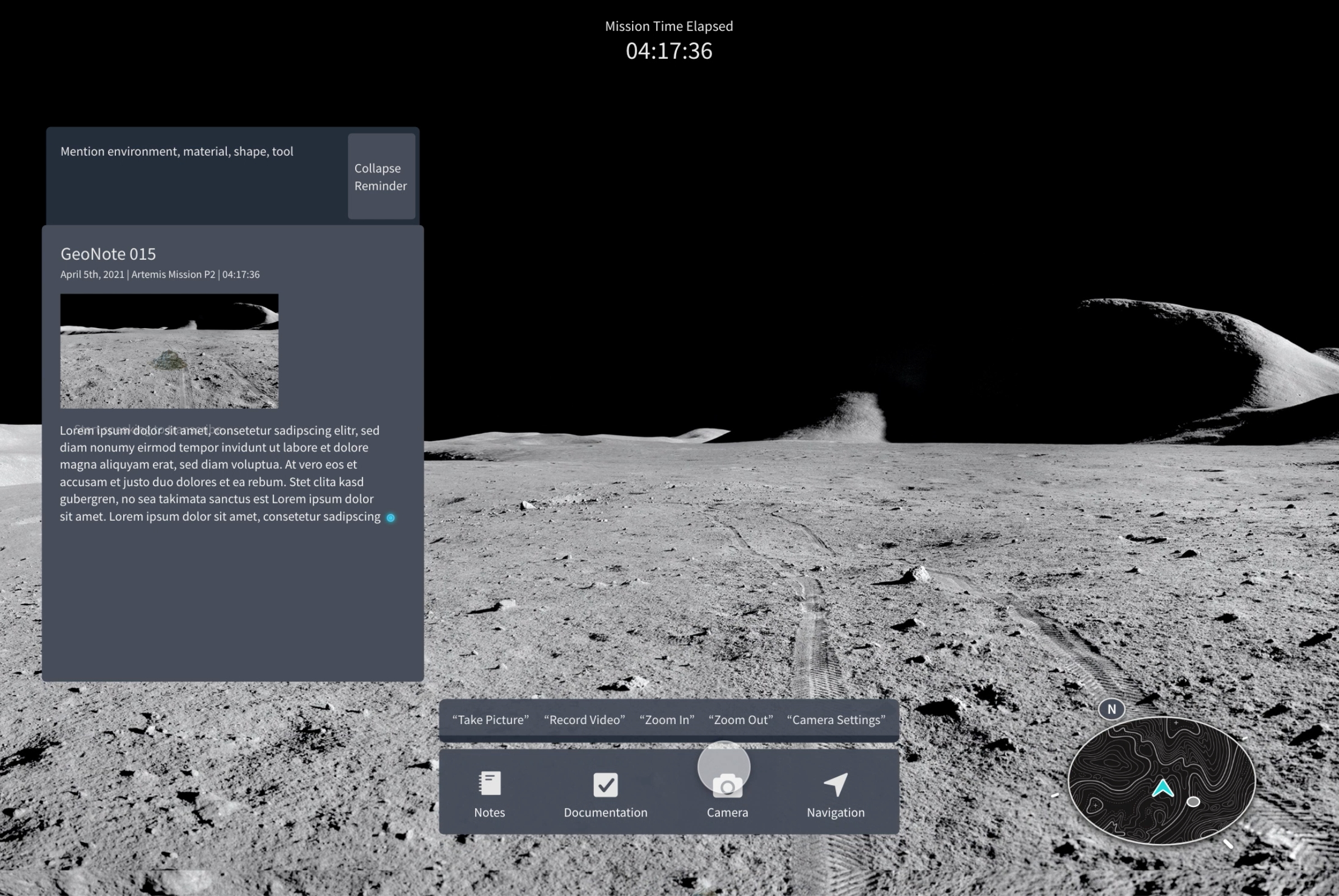

Scenario 2

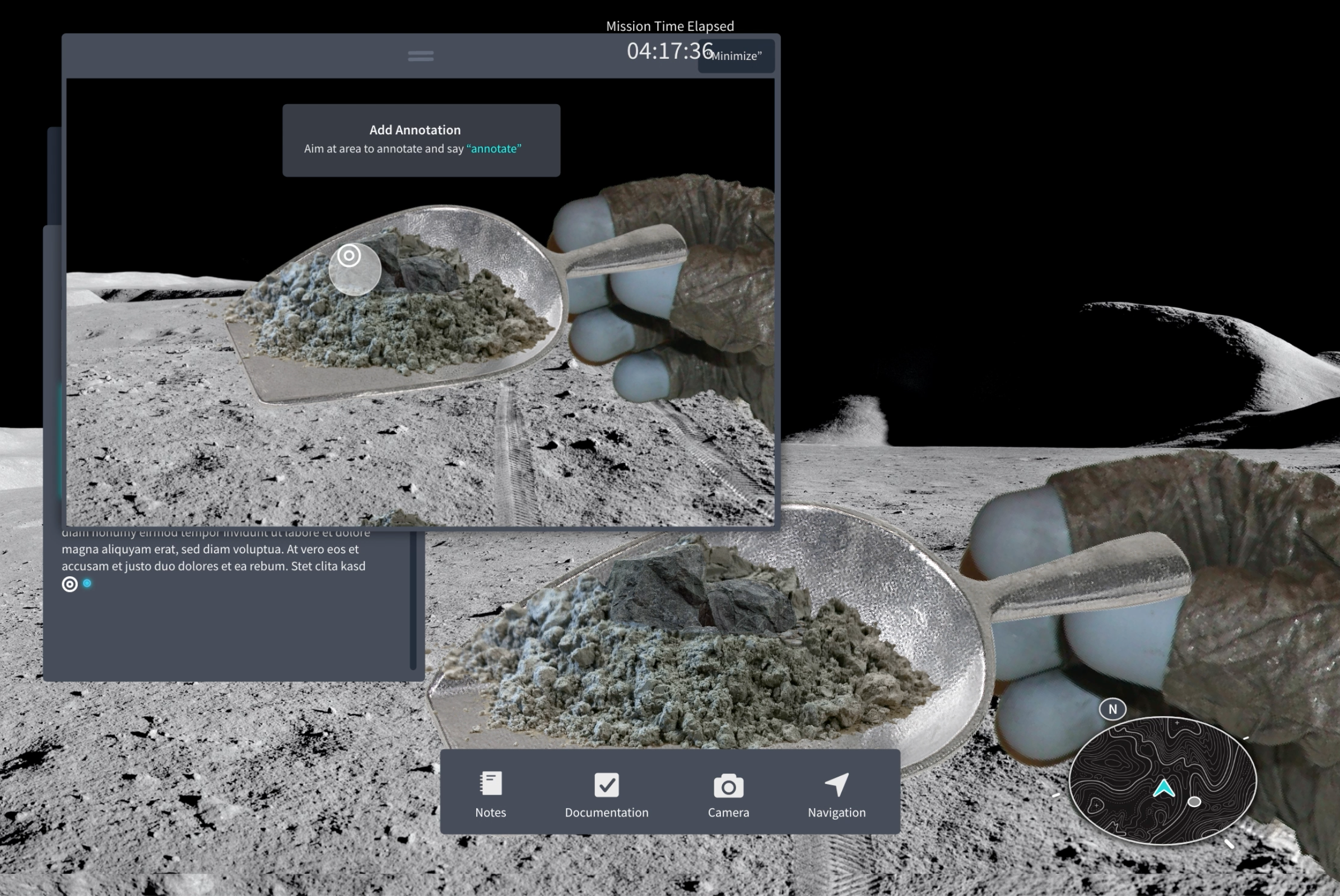

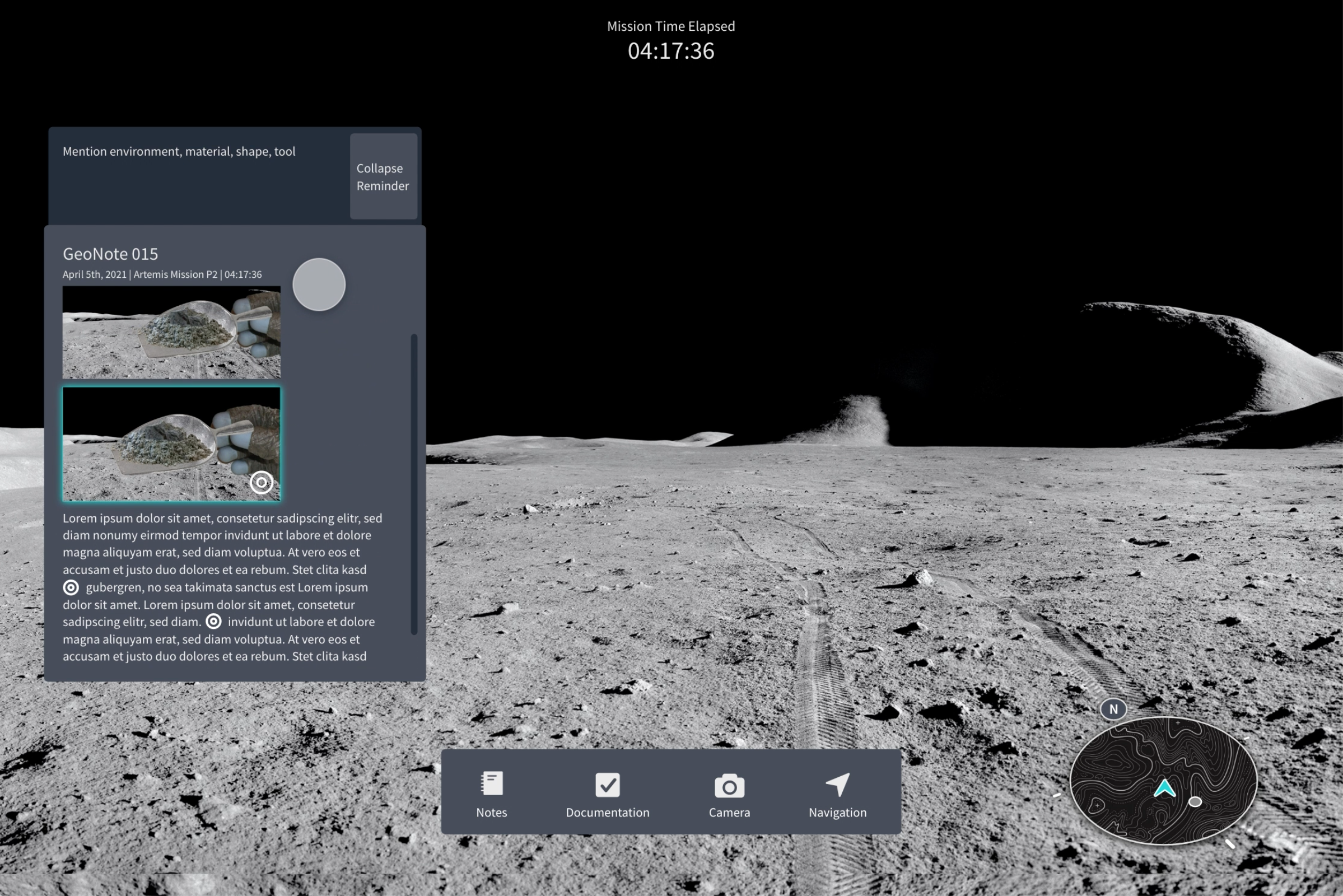

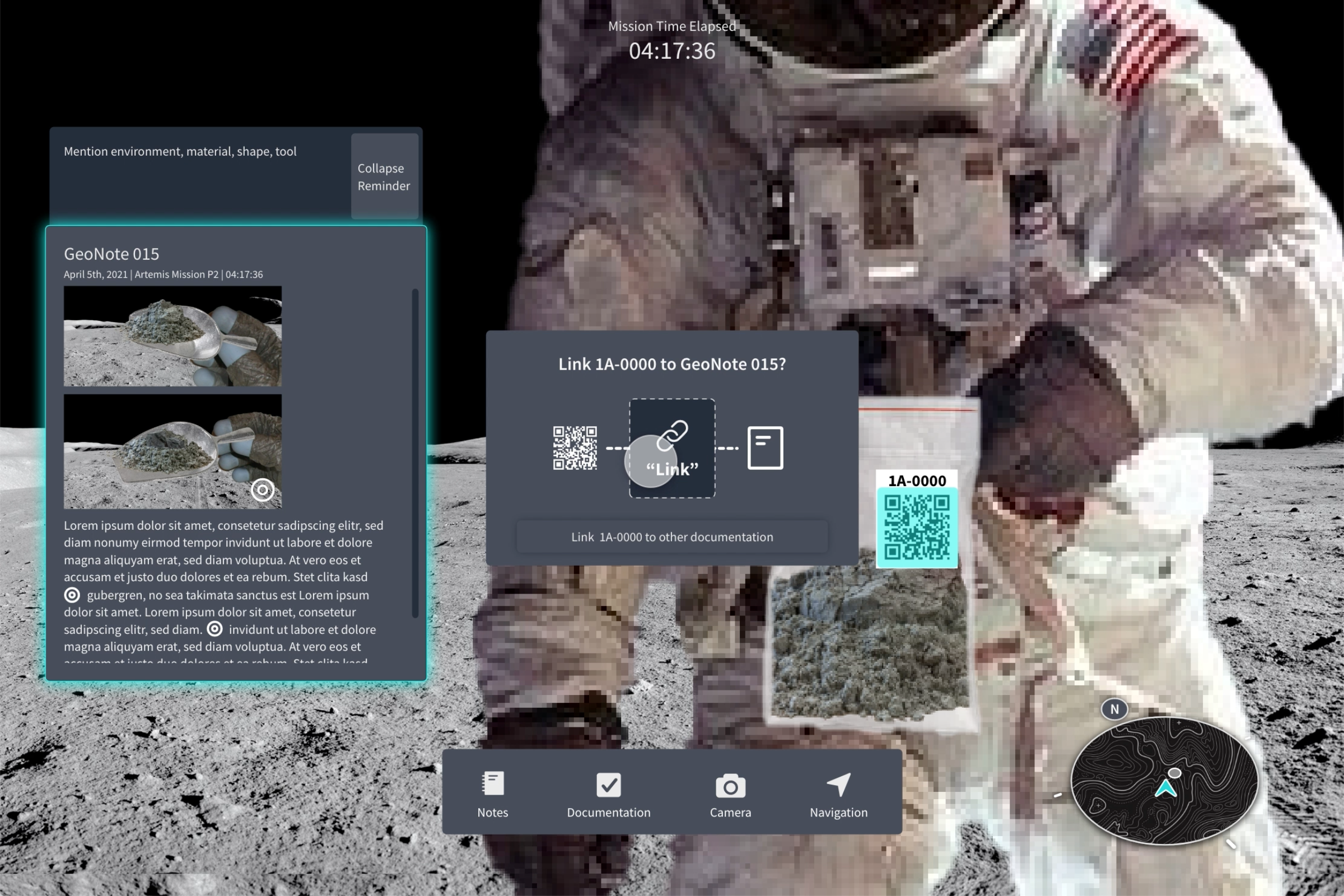

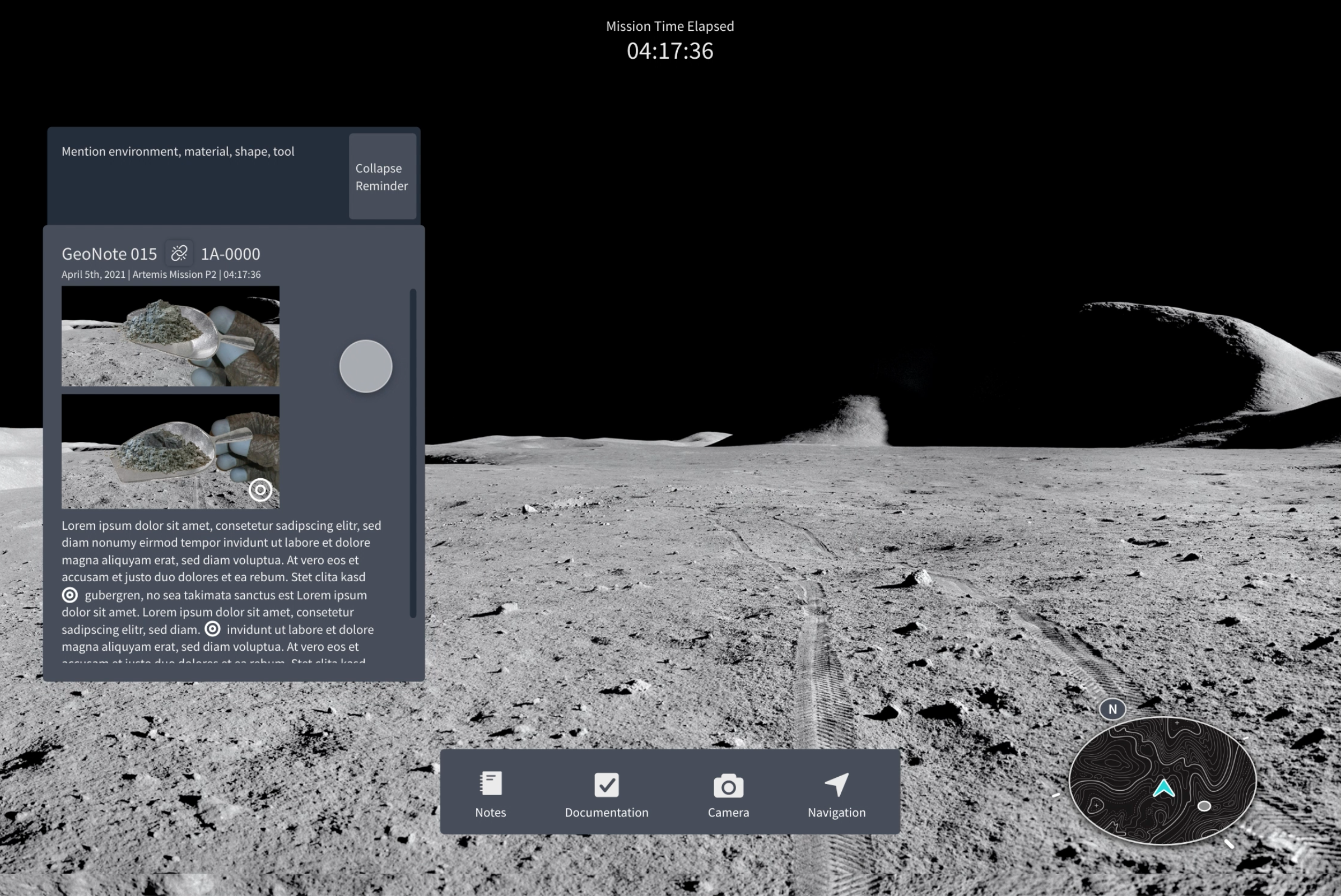

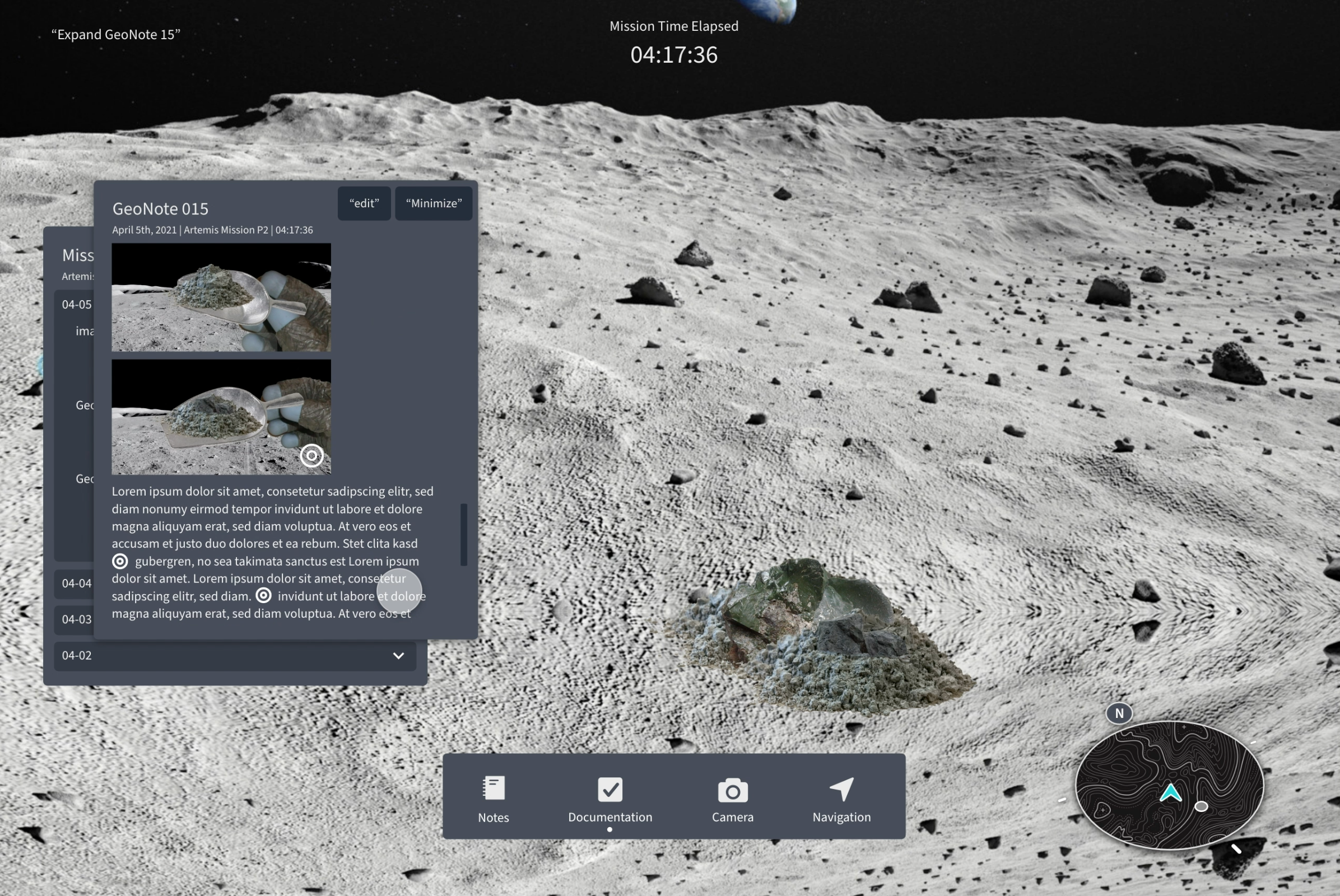

Jane and Neil decide to collect some samples. Jane goes through the process of creating the GeoNote, taking pictures, adjusting camera settings, making voice notes, making annotations on the images and linking the note to the sample bag they’re storing the sample in.

Key design points

GeoNote documentation (creation/modification)

Camera operation

Image annotation

Unique sample bags with QR codes

Linking GeoNotes with sample bags

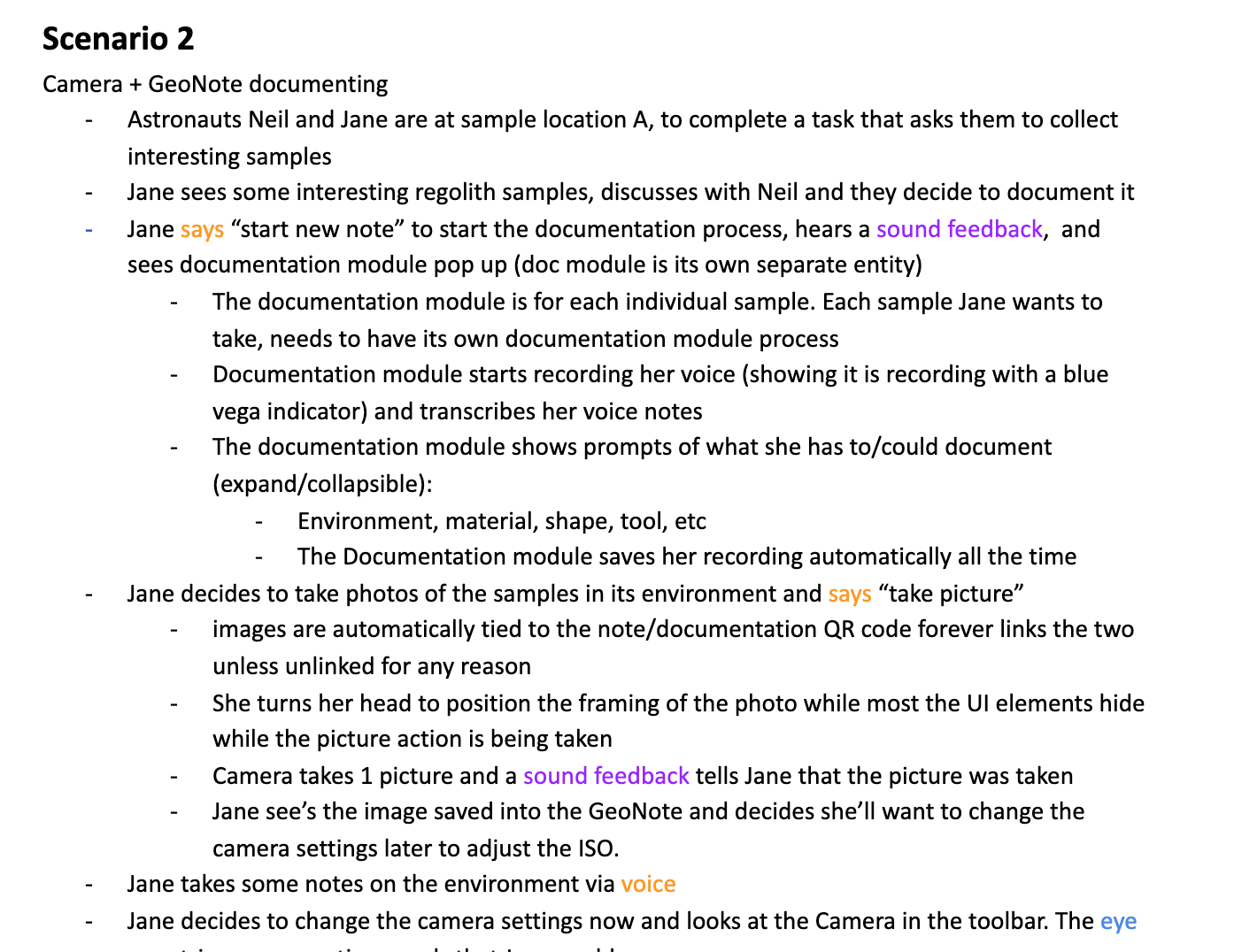

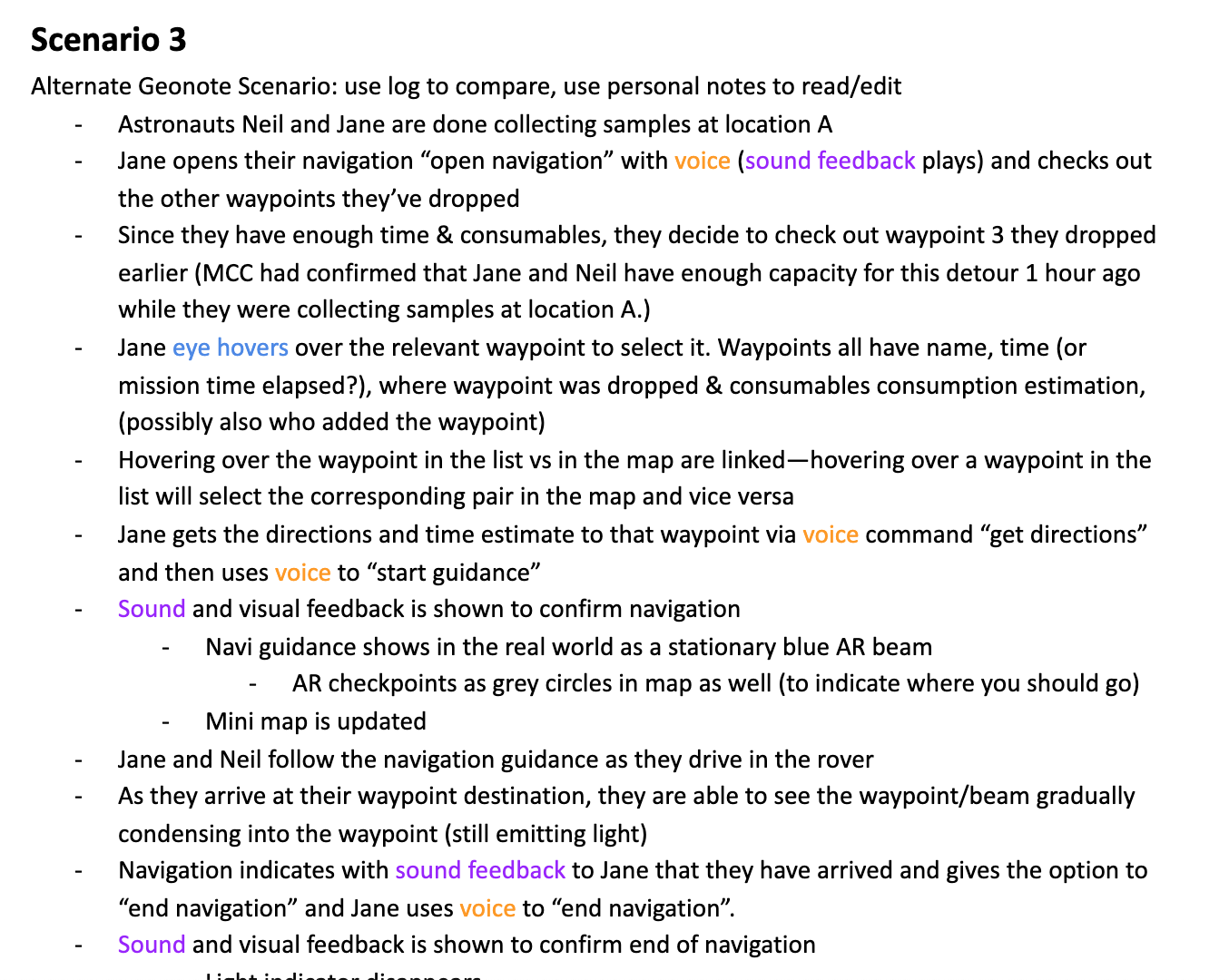

GeoNote documentation

A dismissible reminder at the top of the GeoNote tells astronauts what they should document

Photos and voice notes (stored as transcriptions) are saved into the GeoNote

GeoNotes save continuously, so the crew does not need to save progress manually

VEGA (blue dot) indicates where it is active

Camera

Most UI elements hide briefly while a picture is taken to give a clear view of the shot. The camera also waits a short time after it’s “opened” to allow for any final compositional adjustments

Suggested voice commands appear above the toolbar when the astronaut gazes at Camera on the toolbar

Image annotation

The annotation icons are marked on the image with eye-gaze (aim) and voice command (mark)

The icon and annotations are recorded into the transcription to help the crew locate the notes

Images with annotations are marked with the annotation icon

Suggested voice commands appear over an image when the astronaut gazes at it

Sample bag

All bags have unique text/numbers for the crew to read and a QR code for the system to scan

Link

The link module is triggered with eye-gaze over the QR code

Items to be linked are highlighted in blue

Once linked, the GeoNote title updates with the bag number, and the link remains available for de-linking/re-linking
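The link, de-link, and re-link behavior can be pictured with a minimal sketch. This is an assumption-laden illustration rather than the actual prototype: the `GeoNote` fields, the bag numbers, and the "(Bag …)" title format are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GeoNote:
    base_title: str
    bag_number: Optional[str] = None   # set once a sample bag's QR code is linked

    @property
    def title(self) -> str:
        # The displayed title reflects the linked bag, if any (format is hypothetical).
        if self.bag_number is None:
            return self.base_title
        return f"{self.base_title} (Bag {self.bag_number})"

    def link(self, bag_number: str) -> None:
        # Re-linking simply replaces the previous bag number.
        self.bag_number = bag_number

    def unlink(self) -> None:
        self.bag_number = None


note = GeoNote("GeoNote 3")
note.link("A12")
linked_title = note.title
note.unlink()
unlinked_title = note.title
```

Deriving the title from the stored bag number, instead of editing the title text directly, keeps de-linking and re-linking reversible.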

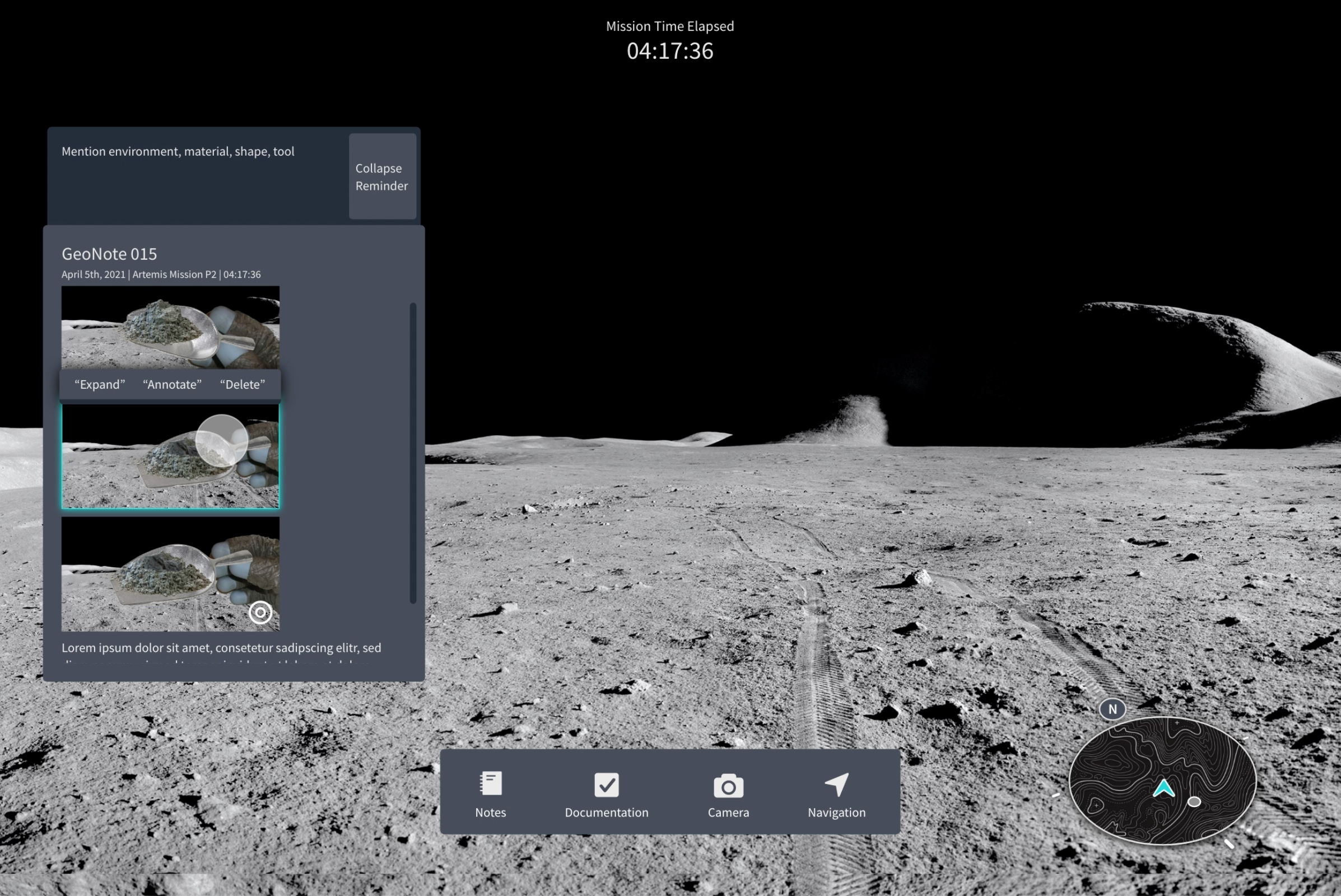

Scenario 3

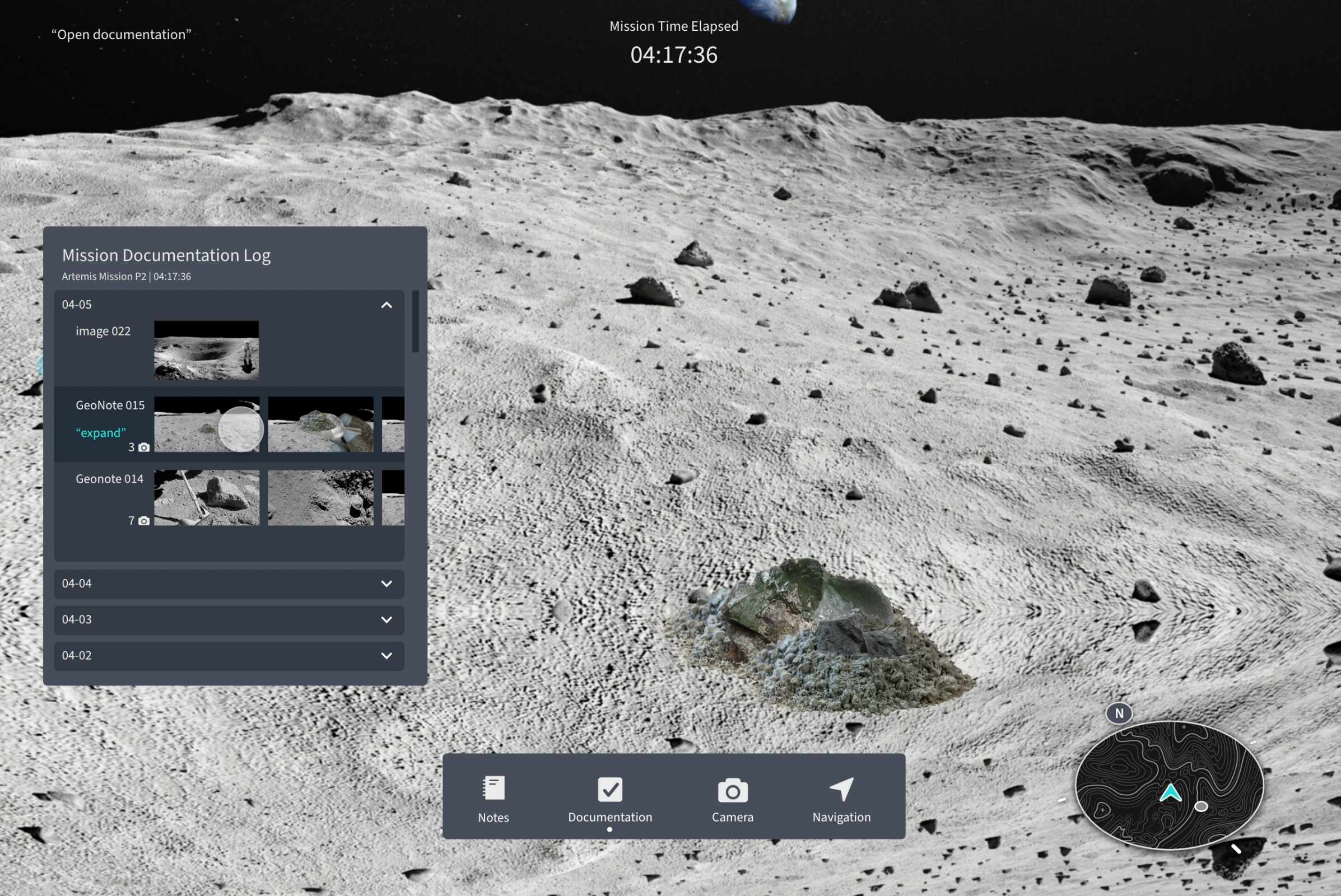

Jane and Neil navigate to the spot marked in Scenario 1. Once they arrive, Jane opens documentation to compare sample information and also jots down some personal notes.

Key design points

Navigation

Review documentation

Personal notes

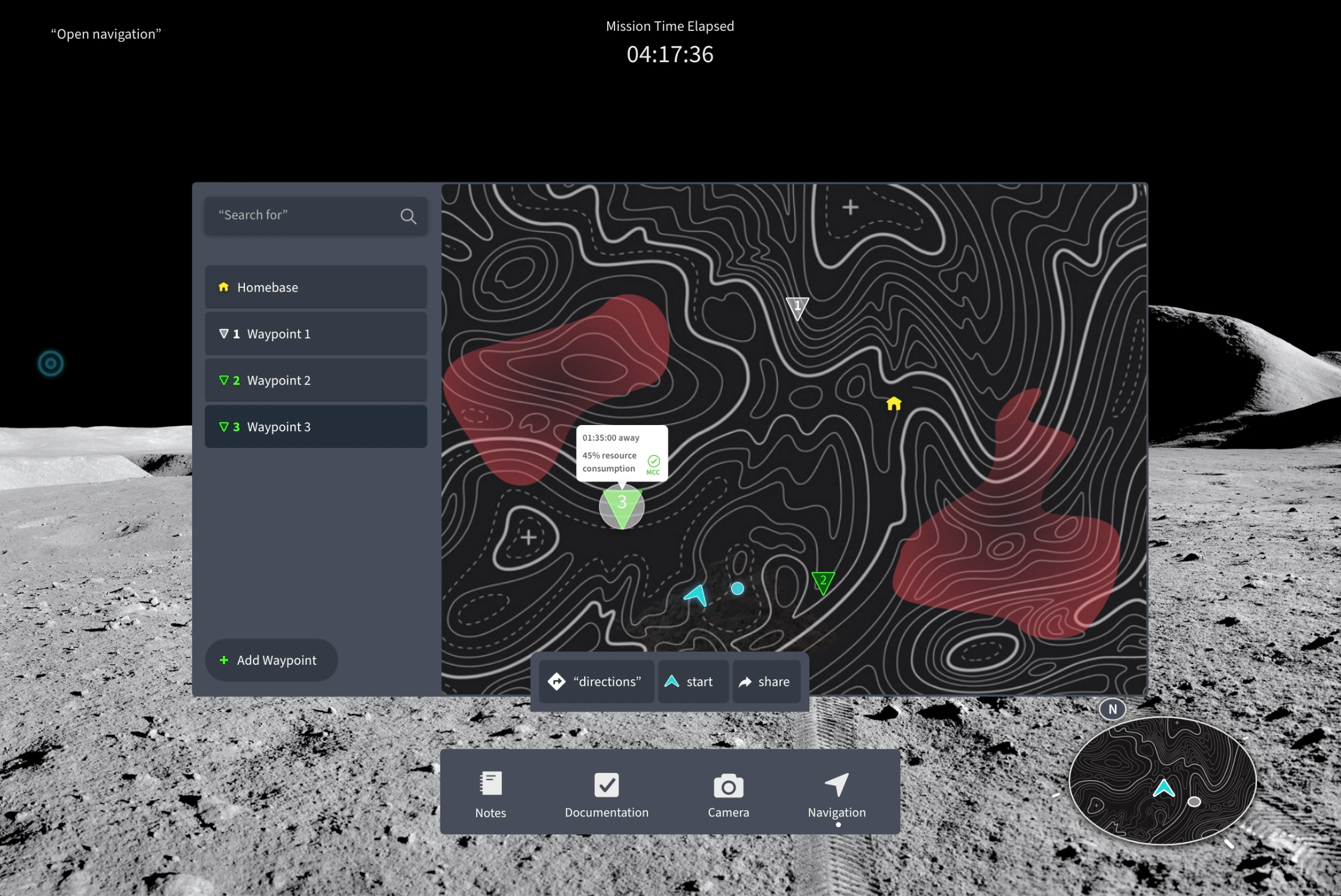

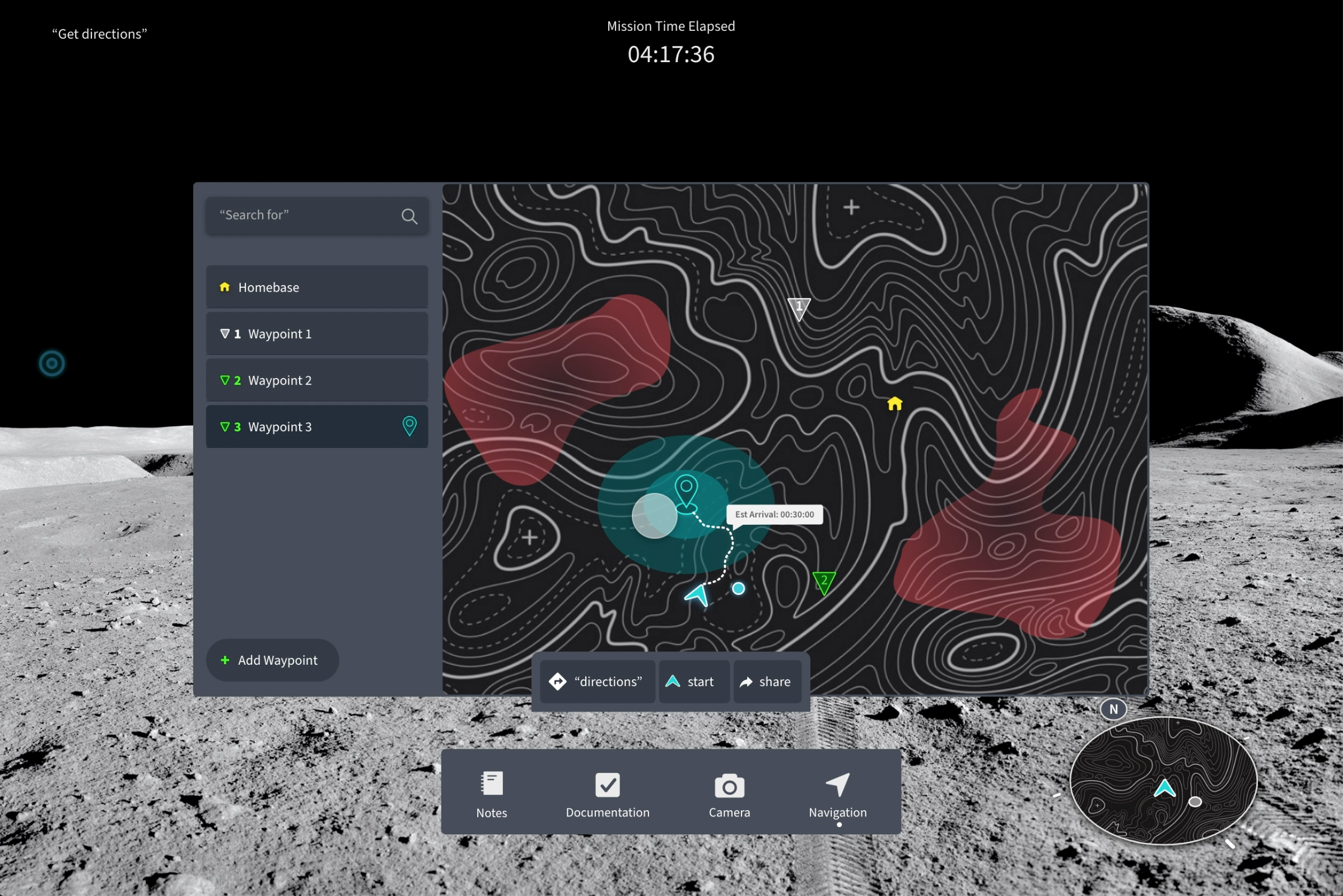

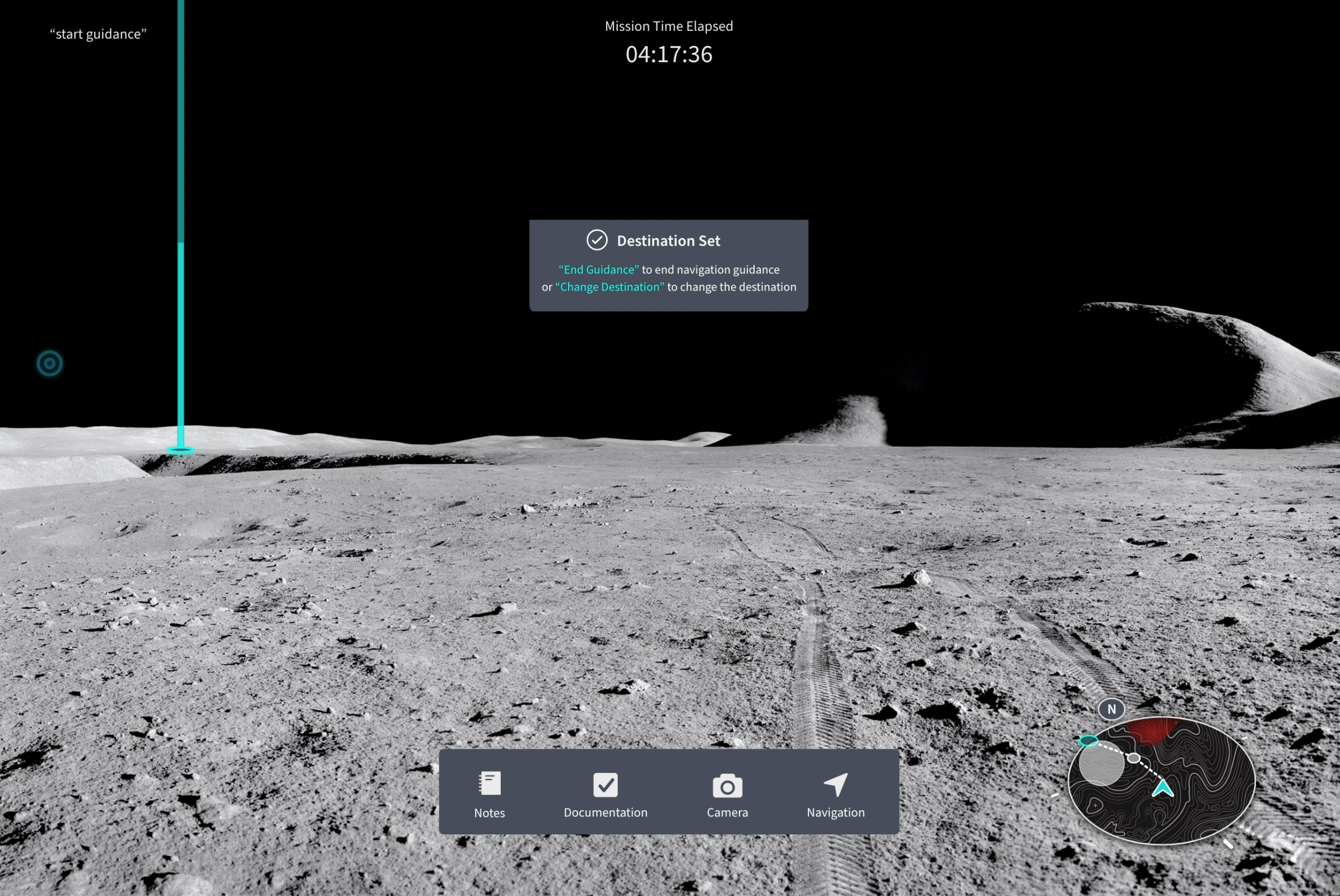

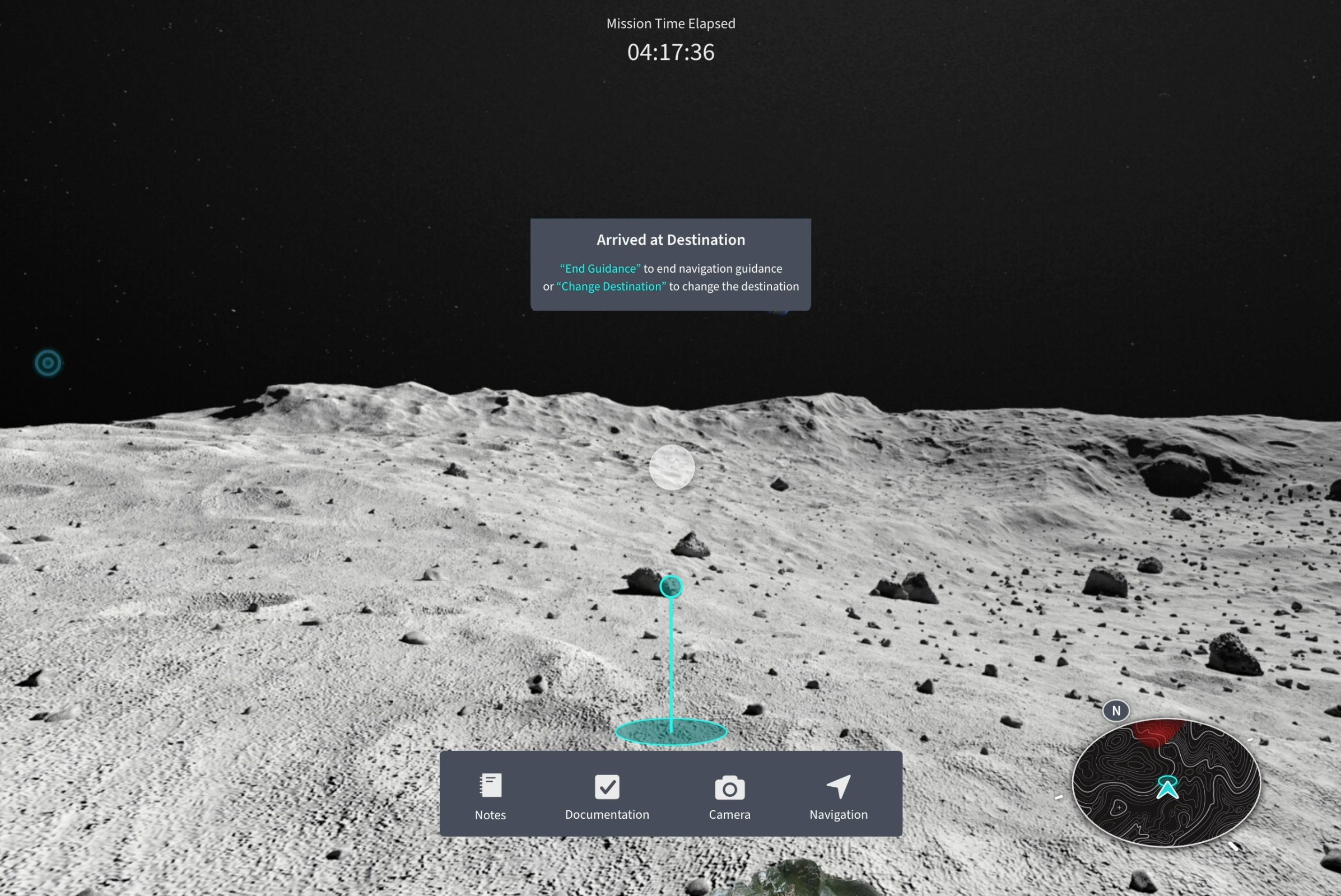

Navigation

In Navigation, the crew can view all the waypoints they’ve dropped, along with details on whether each one can be navigated to

When navigation starts, a light beam marks the destination and the mini-map switches to a route that avoids no-go zones

As the crew gets closer to the beam, the beam shortens and eventually ends as an indicator on the ground
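One simple way to think about the shrinking beam is as a height that scales with remaining distance. The sketch below is only an illustration of that idea; the linear falloff and every number in it (maximum height, far distance, arrival radius) are hypothetical, not values from the design.

```python
def beam_height(
    distance_m: float,
    max_height_m: float = 50.0,      # hypothetical full beam height
    far_distance_m: float = 200.0,   # at or beyond this, the beam is at full height
    arrival_radius_m: float = 2.0,   # within this, only the ground indicator remains
) -> float:
    """Return the beam height for the crew's current distance to the waypoint."""
    if distance_m <= arrival_radius_m:
        return 0.0
    if distance_m >= far_distance_m:
        return max_height_m
    # Linear interpolation between the arrival radius and the far distance.
    fraction = (distance_m - arrival_radius_m) / (far_distance_m - arrival_radius_m)
    return max_height_m * fraction


for distance in (500.0, 101.0, 1.0):
    print(distance, beam_height(distance))
```

A height of zero corresponds to the state where the beam has collapsed into the indicator on the ground.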

Review documentation

Documentation stores all images and GeoNotes taken during EVA missions

Access to previous documentation allows the crew to compare and contrast samples before collection

Personal notes

Personal notes let the crew quickly jot things down for themselves

Reflection

This year-long process took me on a challenging yet exciting journey of doing research and creating designs. My biggest takeaway is truly understanding what “research is an ongoing process” means. Research doesn’t end when we start designing; in fact, the more we researched and learned, the more we moved away from our earlier designs and iterated on them. It gave me a headache whenever I wanted to change my designs because I had read articles and papers or watched lectures and talks on new ways to interact with these technologies. But knowing that each change would bring us closer to our design goals always made me commit to taking that extra step.

I’m very proud of the final designs, my team, and what I have learned throughout the process. I not only practiced designing for voice and eye-gaze interactions but also touched on sound design and learned how difficult it is to balance visual and audio feedback. Beyond the process itself, the greatest sense of accomplishment came when my team and I presented. The follow-up questions people asked and the interest NASA scientists showed are what made this year-long effort truly rewarding.

Next steps

Although the project has concluded, there is much more that can be done in terms of research and design. More user testing with either astronauts or general users would be extremely helpful for improving the interface, finding the optimal feedback type and frequency, and evaluating the flow of interaction. Testing in controlled environments with devices like the HoloLens or a HUD would be ideal for gaining accurate feedback.

〰️ the nerdy stuff 〰️

Duration: Jan 2020 - May 2021 (1 yr 4 mo)

Tools: Illustrator, XD, Figma, HoloLens, Premiere Pro

Skills & Keywords: mixed reality (MR) design, voice user interface design, paper prototyping, interview protocol, interview, user testing, user flow, persona, hi-fi prototyping

Exhibitions: XR@Michigan 2021 Summit Student Showcase, UMSI Student Exposition - Spring 2021

Collaborators: Hope Mao, Nigel Lu